It is easy to blame people for security incidents, and this happens a lot. I believe this is an area where the cyber security field still needs to mature, because simply saying it’s down to human error won’t get us anywhere.

James Reason, a professor of psychology at the University of Manchester, has studied the various ways things can go wrong when reliability depends on humans. Although his work is primarily applied in safety-critical contexts, such as process plants, aviation and healthcare, I find his principles highly relevant for cyber security as well.

First comes the class of inadvertent errors, where there is no deliberate bad intention behind the actions causing them:

- Slip: A frequent action, which requires little conscious attention, goes wrong.

- Lapse: A particular action was omitted because it was forgotten.

- Rule-based mistake: A routine is followed, but a good rule is misapplied or a bad rule is applied.

- Knowledge-based mistake: No routine is available, and your knowledge and experience are not sufficient to carry out the action safely.

Slips and lapses are actions that went wrong, i.e. they did not go according to plan. An example would be accessing company resources over a public WiFi network that has been compromised by an attacker. A possible mitigation is to deny access from outside the company’s network, while offering VPN access so people can still reach the resources they need. However, every website should use HTTPS anyway, and when it is applied correctly, traffic to the site is protected even on public WiFi.

Mistakes, by contrast, stem from a conscious plan that was flawed, i.e. the actions are carried out correctly, but according to the wrong plan. For example, a colleague may try to encrypt a highly sensitive email, still fail to get it right, and end up sending the message in the clear. While I do not have high hopes for the usability of email encryption, relevant countermeasures in such cases include human-centred design and automatic error detection that warns the end user. We also have an opportunity to offer employees better ways of exchanging sensitive information than email, in terms of both security and usability.

On the other hand, we have the deliberate actions which cause non-compliance. Such actions, also known as violations, can be categorised as:

- Routine: A rule is so poorly implemented that its omission has become the norm.

- Situational: A shortcut is sometimes taken to get the job done.

- Exceptional: A calculated risk is taken due to special circumstances, to solve an otherwise impossible task.

Routine violations boil down to problems with how rules are communicated, justified and supported by technical systems. Maybe people aren’t aware of the rule, or at least not of why it exists in the first place. When there is an acceptable reason for a rule, breaking it becomes socially unacceptable. The norms embedded in company culture play a crucial role in secure behaviour. But if all we have is a bad rule, such as denying employees access to social media in the name of information security, violations will spread, and people will start breaking other rules as well. We need to fix the bad rule before our entire culture is compromised.

Situational violations are often the result of time or resource constraints, stress or a lack of proper tools to get the job done. In a software development context, for example, this could mean shipping a release without testing it for security bugs. This problem could be partly mitigated technically by integrating automated security tests into the release pipeline. The real problem, however, may be a cultural one: unrealistic workloads and deadlines, with security as an afterthought. People’s perception of risk may be inaccurate here, and security training can be very effective in combination with security-aware leadership.

Exceptional violations may include that email you received that you just had to check out. While this case is quite common and not really “exceptional”, the context provided by the email may have been sensational enough to make you click a malicious link or open a virus-infected attachment. Maybe you even responded promptly to that request to transfer money to a foreign bank account, because it was requested by the “CEO”, after all. This is a challenging area to mitigate, because no technology vendor has yet entirely solved the malicious email problem. It is also impossible to guarantee that no employee will be tricked by an email, no matter how much security awareness and training you equip them with. However, how well IT is able to support people in questioning the authenticity of an email can be essential here. Although some kind of “spam hotline” may be resource-demanding, these interactions provide an invaluable opportunity for IT and security to build trust with other employees in a positive, supportive manner. They also give people a viable alternative to just clicking the link or opening the attachment when figuring out whether an email is trustworthy.

There are several reasons why people are prone to error, and this applies even to cyber security professionals like us, for instance when dealing with the human factors of security. Danielle Kingsbury has written a post on cognitive biases in risk assessment, which illustrates how easily we security professionals can believe we see the true picture when in fact we do not. Across all of these areas of failure, the challenge remains to consider the people involved not just as causes of failure, but as genuine human beings. We all make mistakes, slip, experience memory lapses and have biases. Sometimes we even violate rules. But we still create great value for the organisations which employ us.

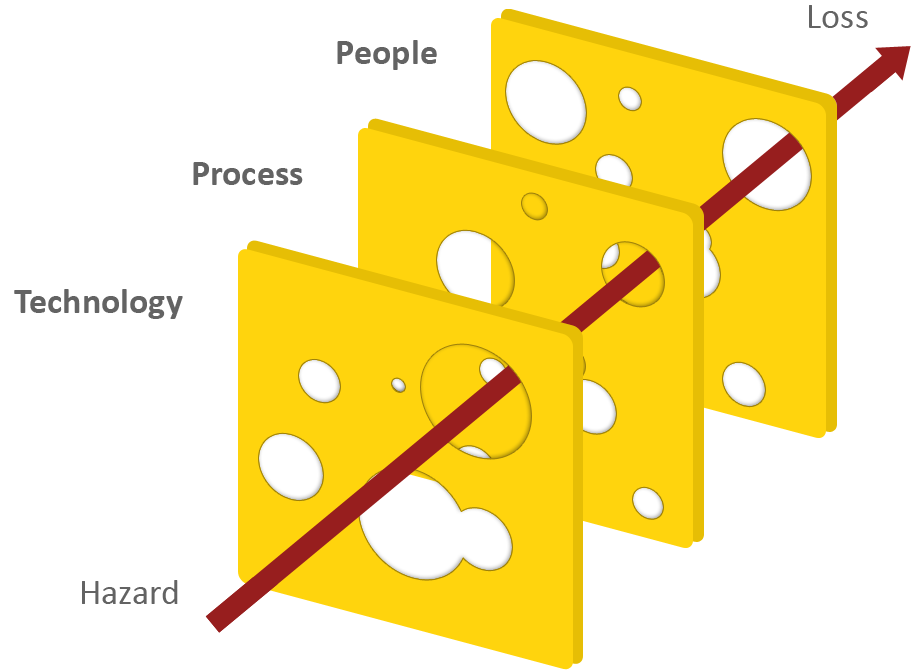

An important finding from human error research is precisely this: no major accident has ever been caused by a single error alone. The Swiss cheese metaphor illustrates this: some holes are due to active failures, while others are latent conditions. Our security strategy must take all of them into account.

This is why we should always apply a barrier-based approach to security. We have some technological barriers, and we have some barriers in our processes. And finally, we have people as barriers. Yes indeed, people aren’t just big holes in the cheese! People are the ones who are able to improvise when the unforeseen happens and all else fails. Quite often this goes very well, yet sometimes it does not. But then the failure was due to holes aligning through all the layers, not only the human one at the end. And the stories we hear about human “zeros” will never compete with the number of unsung heroes.

Photo and illustration: Erlend Andreas Gjære

(This post has also been translated to Portuguese by Afonso Aves, thank you!)

PS: If you are looking for actionable advice to avoid common human errors in cyber security, my company Secure Practice can help. Drop us a line and we’ll get in touch!